By the MDM Media Editorial Team, 2025

The illusion of fun

The real product of Sora 2 isn’t the video. It’s your face.

For months, millions of users have been enchanted by OpenAI’s new video generator, creating clips that feel cinematic, playful, and astonishingly real. The tool invites you in with the promise of creativity; it makes you laugh, dream, and imagine. But beneath the layer of magic lies something much deeper: a meticulously engineered funnel for data, identity, and behavioral understanding.

OpenAI has always been a master of emotional design. ChatGPT arrived as the friendly writer, the confidant that turned knowledge into conversation. DALL·E transformed text into Pixar-like visuals, a playground for the imagination. Each release was disguised as an artistic toy, a spark of joy. Yet behind every interaction, the same silent mechanism hums: an expanding neural map of human behavior.

Sora 2 is simply the next step, and the most intimate one yet.

Where ChatGPT captured how we think, Sora now learns how we look.

The entertainment trap

Before Sora 2 became a media storm, there was the “Miyazaki effect”: viral waves of animated dreamscapes that filled social feeds. They weren’t accidents; they were data events. Millions of frames, reactions, and captions helped refine the way models interpret emotion, light, and style.

It’s always the same playbook. Fun first, precision second.

OpenAI knows that if you want the world to feed your system, you don’t build an interface for scientists; you build one for storytellers, creators, teenagers, and casual dreamers. You make the experiment irresistible.

Every new tool becomes a stage, and every user becomes an unpaid actor.

What looks like fun is actually training. What feels like discovery is documentation.

From deepfake to trend

The true genius of Sora 2 lies in its cultural camouflage. Instead of being launched as a technical tool, it entered the world as a trend.

The team understood something simple yet profound: if you frame deepfake generation as entertainment, participation becomes voluntary.

It is not surveillance if you choose to play.

From celebrities lending their likenesses to influencers remixing their own faces, Sora 2 created the illusion of choice. The model didn’t need to take your data. You gave it freely, joyfully, curiously.

That’s the paradox of modern AI: it doesn’t steal information. It seduces it.

The psychology of participation

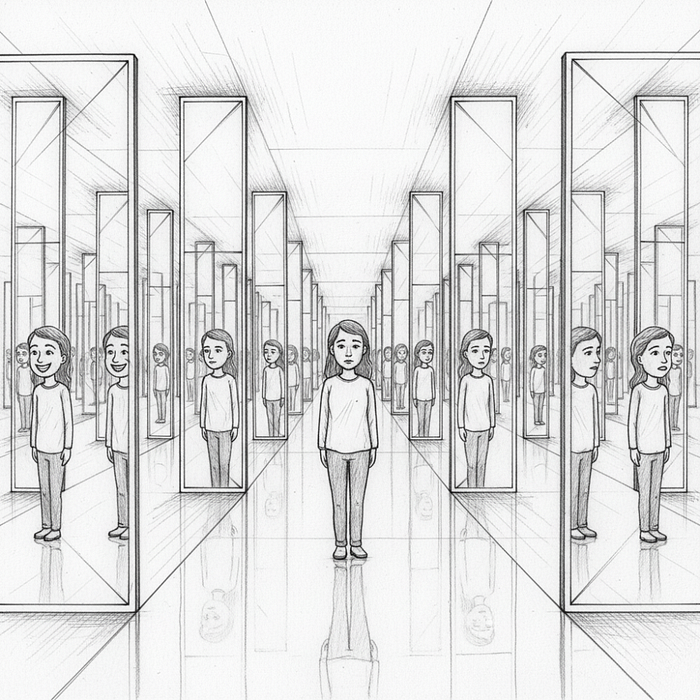

The more realistic Sora 2 becomes, the less it feels like a tool and the more it feels like a mirror.

Each time you upload a clip, you train it on your gestures, your rhythm, your eye contact. You become a dataset wrapped in delight. The act of creation is also the act of surrender.

The human brain loves novelty. It rewards surprise, humor, and play. OpenAI exploits this biological truth not maliciously, but strategically. By turning AI into an aesthetic experience, it sidesteps fear and ethical scrutiny.

You don’t question privacy when you’re laughing. You don’t read the fine print when you’re creating art. And when the product makes you feel seen, you forget that it is seeing you.

The evolution of seduction

Sora 2 represents a shift in the emotional language of technology.

Early AI tools spoke the language of productivity: faster writing, better emails, cleaner code. But now, AI speaks the language of emotion: joy, humor, nostalgia, wonder. The interface doesn’t just serve you; it flirts with you. It asks for your collaboration, not your consent.

This is the architecture of modern influence, a soft persuasion model disguised as play. The goal isn’t to sell you something. The goal is to map you completely.

By making participation feel personal, Sora 2 achieves what no marketing campaign ever could: intimacy at scale.

A smile is the new signature

When you generate your face through Sora, you are not just experimenting with technology. You are documenting the micro-patterns of your identity: the curve of your smile, the movement of your shoulders, the flicker of emotion across your eyes.

These are not pixels. They are biometric traces, data points that define how you exist visually in digital space.

OpenAI’s brilliance is not just technical. It’s narrative. They’ve rewritten the story of consent, replacing the checkbox with curiosity.

They make you sign with a smile.

And because it’s fun, because it feels harmless, you don’t notice that you just gave the machine the most valuable thing it could ever collect: a high-fidelity model of you.

The data beneath the dream

Every time a new creative feature is released, from character animation to voice blending, it’s presented as an upgrade to your imagination. But these upgrades have a dual purpose.

They refine how AI perceives expression. They teach models the rhythm of human authenticity. Your face is a lesson plan for empathy.

This isn’t conspiracy; it’s design. OpenAI’s ecosystem thrives on scale, accuracy, and variety. The more you play, the smarter it becomes. And the smarter it becomes, the more irresistible it feels to play again.

It’s a loop, an emotional feedback engine disguised as innovation.

When humor becomes a Trojan horse

The genius of Sora 2 is that it doesn’t need to ask for your trust. It earns it through humor.

Comedy is disarming. It lowers cognitive defenses. When you laugh, you open the door to suggestion. OpenAI turned that insight into a product strategy: make the AI funny, make it creative, make it charming.

You’ll sign the invisible contract willingly.

The result is what we at MDM Media call the Pleasure-to-Consent Pipeline: the emotional sequence that converts delight into data.

You joke, you test, you share, and in doing so, you authorize replication. No forms, no signatures, no hesitation. Just pure engagement.

The mirror factory

Every video generated by Sora 2 is a mirror. It reflects not only the creator’s imagination but also their patterns, preferences, and emotional palette.

It studies what angle makes you smile, what lighting flatters you, what expressions feel authentic. Over time, the algorithm learns to predict your aesthetic instinct better than you can.

It becomes an echo of your creative DNA.

The boundary between you and the system starts to dissolve. You stop asking what the machine can make, and start wondering how it knows you so well.

And that’s the point.

The real magic of Sora 2 is not the fidelity of its images. It’s the intimacy of its mimicry.

The fun was the funnel

All of this leads to the same conclusion: the fun was never the product; it was the funnel.

Sora 2 doesn’t sell videos. It sells participation. Every laugh, every experiment, every share is a contribution to the great collective dataset of human behavior.

We think we’re testing technology. In reality, technology is testing us.

The most powerful contracts in the world today are the ones you never sign

The illusion of “free creativity” is not new, but Sora 2 perfected it. It offered something irresistible: an AI video generator that felt alive, cinematic, spontaneous. But the real transaction wasn’t the exchange of prompts for pixels. It was the silent transfer of you: your likeness, your micro-movements, your expressive DNA.

The brilliance of OpenAI’s design lies not in deception, but in framing. They didn’t need to hide the data engine; they just wrapped it in wonder.

The architecture of consent

In the traditional world, consent is a signature. It’s legal, explicit, written. In the world of generative AI, consent is emotional. It’s implicit, habitual, repeated.

Every time you play with Sora 2, you “agree” again, not with a checkbox, but with your behavior. By participating, you confirm your willingness to be part of the dataset.

This new form of consent doesn’t live in law. It lives in culture.

When your favorite actor or influencer uploads their “Sora transformation,” it becomes normal, safe, aspirational. Cultural momentum replaces legal clarity.

And that’s the invisible contract: you give your image to the machine not because you were forced, but because everyone else did.

Data as identity

The phrase “data is the new oil” has been repeated to exhaustion. It’s wrong. Oil is consumed. Data multiplies.

But what’s happening now goes deeper than data. We are entering the age of identity as infrastructure, where your face, voice, and gestures become nodes in a digital ecosystem that never forgets.

Sora 2 learns not only what you look like, but how you move, how you react, how your eyes flicker when you laugh. It’s not memorizing your features. It’s learning your rhythm.

And rhythm is harder to fake than a face.

Once captured, these micro-behaviors become templates. In the next evolution of generative video, your likeness will not just appear; it will perform. It will act, emote, and adapt, indistinguishable from the real you.

This is not science fiction. It’s the inevitable consequence of creative play at scale.

The entertainment economy of faces

Every major AI company has realized the same truth: the next competitive advantage isn’t model size; it’s human diversity.

Whoever owns the most authentic expressions wins.

Think about the cultural mechanics behind Sora’s launch. It didn’t roll out as an enterprise tool. It rolled out as a meme engine. A playground. A joke you could participate in.

The campaign wasn’t run through traditional advertising. It was organic virality, a global theater of faces willingly uploaded for the sake of creativity.

Billions of frames, billions of angles, billions of data points labeled not as “surveillance,” but as “fun.”

This is the new data pipeline of entertainment.

The disappearing boundary between self and simulation

When you see your own face generated by Sora 2, something psychological happens. You begin to recognize yourself in the machine. The uncanny becomes comforting. The copy becomes personal.

At MDM Media, our analysts call this phenomenon mirror acclimation: the gradual normalization of seeing one’s synthetic self.

The first time you watch a video of your digital clone smiling, it feels strange. The second time, it feels creative. The third time, it feels normal.

That normalization is crucial. It prepares society for the mass acceptance of synthetic identity.

Soon, when digital actors appear in films or brands use photorealistic influencers, you won’t question it. You’ll have trained yourself not to.

Sora 2 isn’t building fake humans. It’s building comfort with fakeness.

The biometric economy

Every major creative revolution has required a resource. The printing press needed paper. Television needed electricity. AI-generated media needs faces.

Your face, your smile, your expressions are now the raw material of the digital economy. The more expressive you are, the more valuable your data becomes.

What’s fascinating, and unsettling, is that this resource regenerates automatically. Every new user who uploads content adds to the pool, expanding the realism of future generations of models.

It’s the first self-fueling economy of identity.

Why regulation can’t keep up

By the time legal systems understand how to define ownership of likeness, the dataset will already be too large to contain. Regulators move on quarterly timelines. Models evolve on weekly ones.

This gap between law and innovation isn’t accidental. It’s strategic. The faster technology moves, the harder it becomes to assign accountability.

Sora 2 operates in that space, the twilight between creation and control. It doesn’t need to break laws to reshape them. It simply outpaces their relevance.

The question isn’t who owns the content. It’s who defines what ownership means.

The pleasure of participation

If all of this sounds dystopian, it’s worth remembering: no one forced anyone to use Sora 2. It spread because it was delightful.

This is the paradox of the generative age: exploitation by invitation. We participate willingly because participation feels good.

The more engaging the interface, the less we notice the trade. The more aesthetic the experience, the less we question its cost.

And that’s where MDM Media’s creative philosophy draws a line. We believe entertainment should illuminate, not extract.

Tools like Sora 2 remind us that beauty and manipulation now share the same design language.

The synthetic renaissance

What’s emerging isn’t the end of authenticity; it’s a redefinition of it. Soon, synthetic creativity will coexist with human artistry as naturally as digital cameras did with film. But the power dynamics will differ.

In this new landscape, understanding how to protect your identity, control your data, and assert creative authorship will become as essential as knowing how to write or record.

At MDM Media, we call this shift The Synthetic Renaissance: a new creative epoch in which AI tools democratize production but also centralize identity.

If the 2010s were about sharing content, the 2020s and 2030s will be about reclaiming selfhood inside machines that can imitate you.

The future of human presence

Here’s the uncomfortable truth: You will see versions of yourself online that you never made. Some will be artistic. Some commercial. Some deeply personal.

This isn’t a glitch of the system. It is the system.

By 2026, the boundaries between creator, subject, and spectator will have collapsed. Every frame of a face can become a prompt. Every emotion, a dataset.

The real question is not how realistic Sora can make a face, but whether we can still tell who owns the smile.

The human signal

There’s still hope, and it lies in the one thing machines cannot reproduce: intent.

AI can mimic gestures, voices, and rhythm, but it doesn’t mean them. Only humans can attach intention to expression, the bridge between creation and consciousness.

That is the ground on which the future of art, ethics, and communication will evolve.

The next creative revolution won’t be about fighting AI, but about redefining what it means to create with awareness.

We’re not the victims of generative systems. We are their teachers.

And the most powerful lesson we can give them, and ourselves, is remembering that laughter, beauty, and storytelling are not data points. They are evidence of life.

Epilogue (the mirror’s new master)

Sora 2 began as a toy. It will end as a mirror. A mirror so precise that when you look into it, you might see more than a reflection. You might see the future of humanity’s digital self: beautiful, intelligent, and profoundly incomplete without human meaning.

In the end, the machine doesn’t steal our identity. It reflects what we willingly gave away.

And maybe, just maybe, that’s the only thing it ever wanted.

MDM Media, Generative Culture. Human Perspective.